|

Gokul M K

I'm a Dual Degree student in Robotics at the Indian Institute of Technology Madras. I work with Prof. Anuj Tiwari at the DiRO Lab on multi-agent quadruped robots. I also collaborate with Prof. Arvind Easwaran at the CPS Research Group, NTU Singapore, where I focus on trustworthy learning agents for F1TENTH autonomous racing. Previously, I was an AI Research Intern at Qneuro India Pvt Ltd, developing deep learning models for EEG and PCG signal inference, and an intern at HiRO Lab, IISc Bangalore, where I focused on learning for bimanual robot manipulation. I was also part of Team Anveshak, the student-run Mars rover team at IIT Madras that competes in international rover competitions. I started there as an Embedded Engineer and was later promoted to Electronics and Software Lead. |

|

Research Interests

I explore Reinforcement Learning, Deep Learning, Generative AI, LLMs, and Robotics. My research interests lie in developing intelligent, model-based, hierarchical controllers for robotic applications. In parallel, I explore ways to advance the reasoning capabilities of LLMs and to fine-tune them using RLHF and SFT. |

|

|

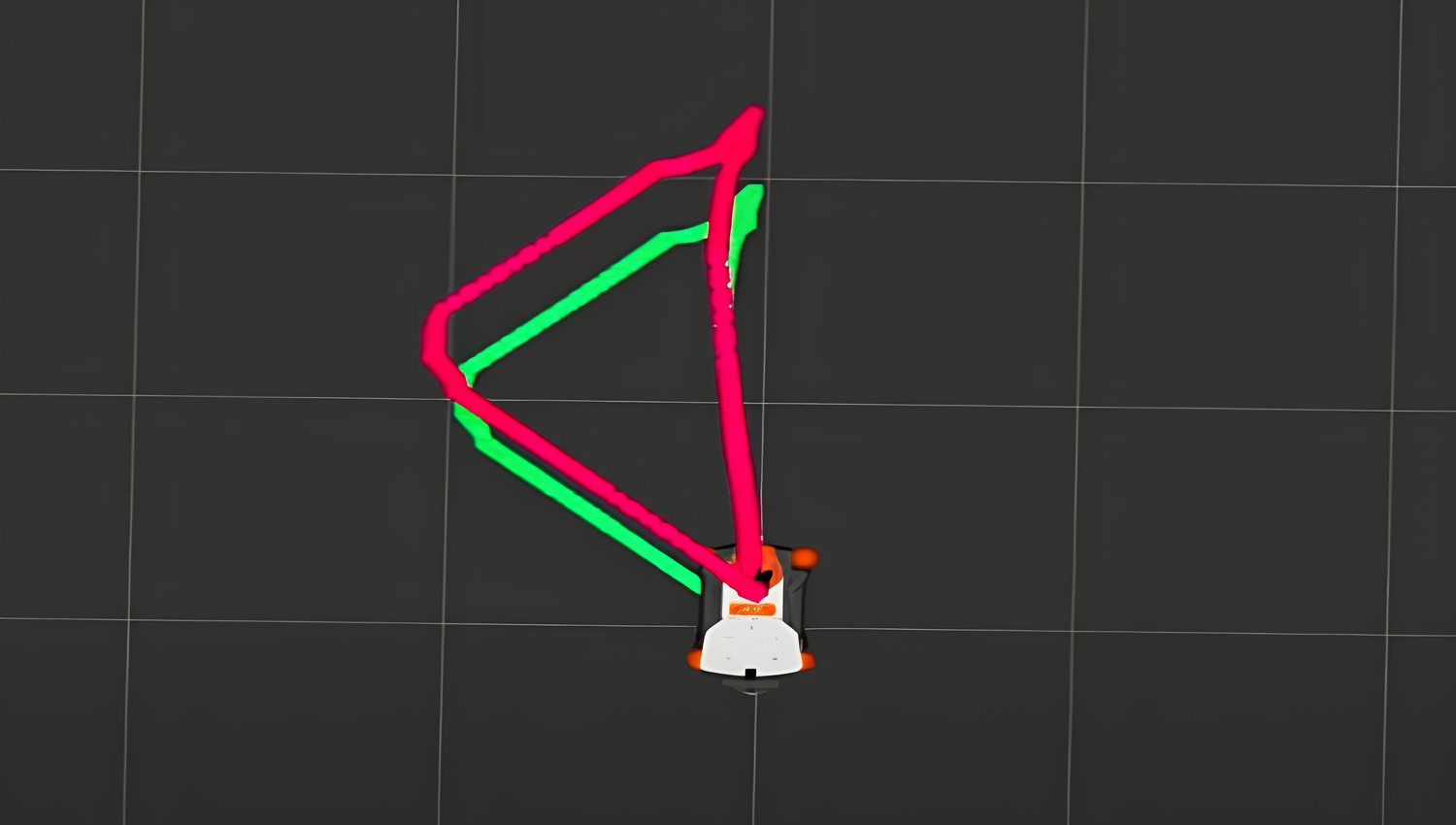

Residual Reinforcement Learning for F1TENTH Racing

NTU Global Connect Fellow (2025)

Gokul M K, Eduardo de Conto, Subrat Prasad Panda, Arvind Easwaran

Improving the baseline tracking controller with residual reinforcement learning, with a focus on generalization across various racing tracks and across static and dynamic obstacles. |
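As a rough illustration of the residual idea, the sketch below adds a scaled, learned correction to a baseline controller's command and clips the result to actuator limits. The function name, the `scale` factor, and the action bounds are hypothetical and are not taken from the project itself.

```python
import numpy as np

def residual_action(base_action, residual, scale=0.1, low=-1.0, high=1.0):
    """Combine a baseline command with a learned residual correction.

    All parameter names and default values are illustrative assumptions.
    """
    # The policy output only nudges the baseline command; scale bounds its influence.
    combined = np.asarray(base_action, dtype=float) + scale * np.asarray(residual, dtype=float)
    # Keep the final command inside the assumed actuator limits.
    return np.clip(combined, low, high)
```

Keeping `scale` small means the combined controller stays close to the baseline when the residual policy is untrained, which is one common motivation for this structure.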

|

|

Locomotion controller for Legged Robots on Uneven Terrains

ISRO

Gokul M K, Anandhakrishnan, Suraj Kumar (ISRO)

Testing the MIT convex MPC framework for quadruped locomotion with various gaits. Addressing the controller's limitations with a data-driven Koopman method and by learning the residual using reinforcement learning. |

|

|

Cooperative Payload Transport using Multiple Quadrupeds in Uneven Terrain

Final Year Project

Gokul M K, Anuj Tiwari

Developing a hierarchical learning-based controller for intelligent cooperative payload transport by a group of quadrupeds in varying terrains. |

|

|

Reinforcement Learning for Dynamic Swarm Navigation

Inter-IIT Techmeet 13.0, PS Team Lead

Gokul M K

Implemented a Decentralized Training and Decentralized Execution (DTDE) approach to swarm navigation in continuously evolving environments. |

|

|

Modulated Dynamical Systems for Coordinated Bimanual Manipulation

HiRO Lab, Robotics Summer Intern, RBCCPS, IISc Bangalore

Gokul M K, Dr. Ravi Prakash

Implemented the LASA Lab (EPFL) research on modulated dynamical systems for coordinated bimanual robotic manipulation. Also worked on implementing TossingBot, which learns residual physics for throwing. |

|

|

Exploring Various Robotic Grasping Algorithms

e-Yantra Summer Intern, IIT Bombay

Gokul M K, Archit Jain, Jaison Jose, Ravikumar Chaurasia

Compared various learning-based and analytical grasping algorithms and benchmarked their performance in simulation and on hardware. Formulated a lightweight grasping algorithm using Euclidean clustering. |

|

Project Groot - Designing and Training Transformer LM from First Principles

Ongoing Open Source*

Gokul M K

A research-focused collection of from-scratch Transformer language models, exploring training stability, scaling behavior, and instruction fine-tuning. Currently fine-tuning grootTiny, a 120M-parameter model, and pre-training grootSmall. |

|

|

Implicit Reinforcement Learning without Interaction at Scale

DA7400, Recent Advances in Reinforcement Learning

Keerthivasan M, Gokul M K

Addressing sub-optimality and diversity challenges in offline RL trained on large datasets collected for long-horizon tasks. Employed a hierarchical framework and validated its performance. |

|

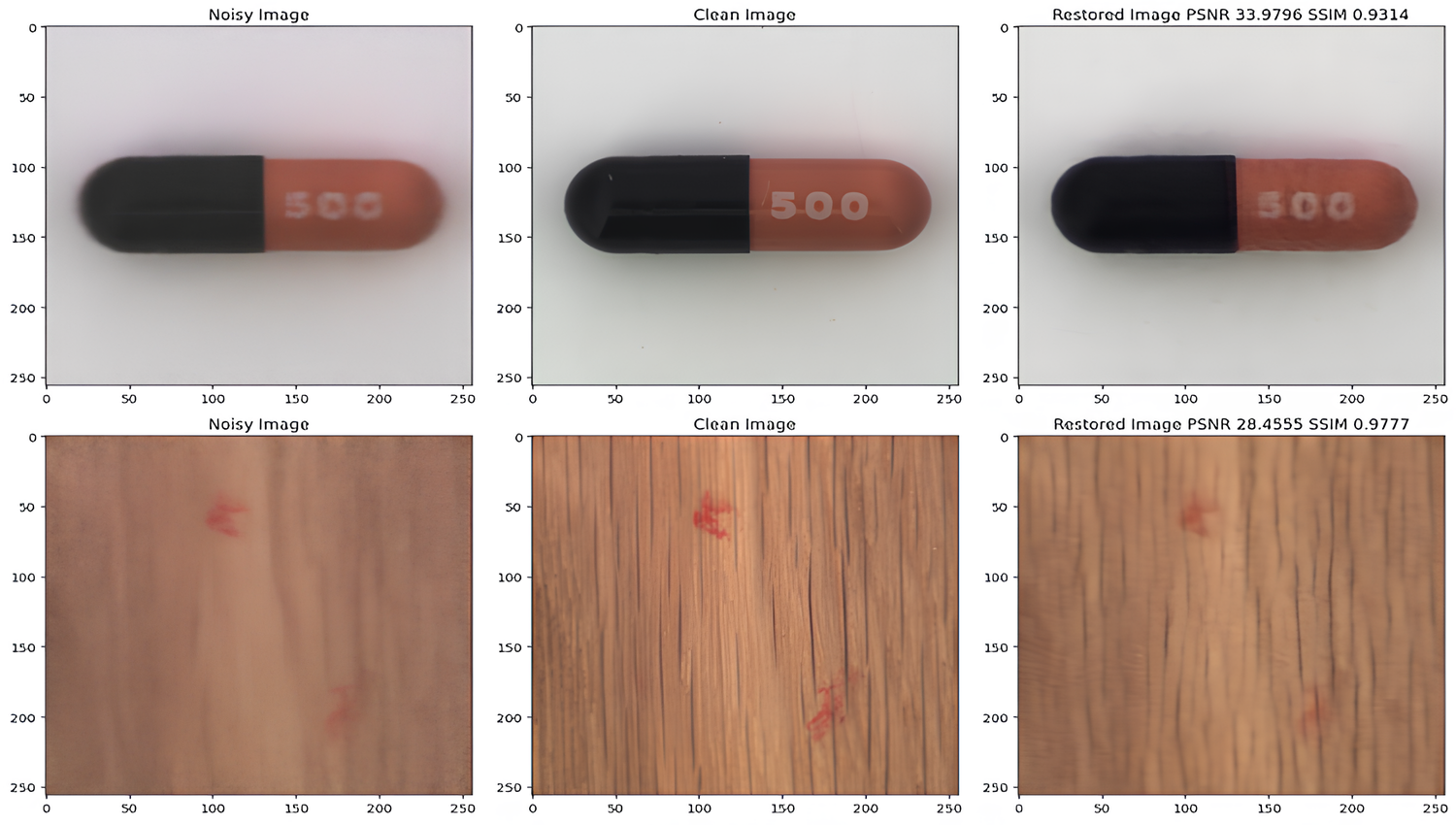

Denoising and Deblurring MVTEC AD Dataset

EE5179, Deep Learning for Imaging

Gokul M K, Keerthivasan M

Optimized RIDNet, a deep learning model, to denoise and deblur the MVTEC AD dataset. It achieved a PSNR of 32.8 on the training examples and 34.78 on the test examples. Benchmarked the results against other architectures. |
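For reference, PSNR is derived from the mean squared error between a clean image and its restored estimate. The sketch below shows the standard formula. The function name and the assumption that pixel values lie in `[0, max_value]` are illustrative, since the project's actual evaluation code and pixel range are not stated here.

```python
import numpy as np

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    max_value is the assumed peak pixel value (1.0 for normalized images).
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    # Mean squared error over all pixels and channels.
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)
```

Higher values indicate a restored image closer to the reference.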

|

Comparative Study of SMDP and Intra-Option Learning in the Taxi Domain

CS6700, Introduction to Reinforcement Learning

Gokul M K, Keerthivasan M

Compared two option-learning methods, SMDP Q-learning and intra-option Q-learning, in the Gym Taxi environment. Intra-option Q-learning made faster updates because it updates after each primitive action rather than waiting for the option to end. |
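The difference between the two update rules can be sketched in tabular form. This is a minimal illustration under stated assumptions, not the project's code: options are assumed to have deterministic policies stored as arrays indexed by state, `betas[o][s]` is an assumed termination probability, and all function and parameter names are hypothetical.

```python
import numpy as np

def smdp_q_update(Q, s, o, cum_reward, s_next, k, alpha=0.1, gamma=0.9):
    """SMDP Q-learning: one update, applied only after option o terminates.

    cum_reward is the discounted reward accumulated over the option's k steps.
    """
    target = cum_reward + (gamma ** k) * Q[s_next].max()
    Q[s, o] += alpha * (target - Q[s, o])
    return Q

def intra_option_q_update(Q, s, a, r, s_next, option_policies, betas,
                          alpha=0.1, gamma=0.9):
    """Intra-option Q-learning: update after every primitive step.

    Every option whose (deterministic) policy picks action a in state s
    is updated, whether or not it is the option currently executing.
    """
    for o, policy in enumerate(option_policies):
        if policy[s] != a:
            continue
        beta = betas[o][s_next]
        # Continue with option o with prob. 1 - beta, otherwise pick the best option.
        u = (1.0 - beta) * Q[s_next, o] + beta * Q[s_next].max()
        Q[s, o] += alpha * (r + gamma * u - Q[s, o])
    return Q
```

Because the intra-option rule fires on every step and for every consistent option, it extracts more updates from the same experience than the SMDP rule, which is the behavior described above.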

|

Trajectory Continuous Optimal Planning for a Mobile Manipulator

ED5215, Introduction to Motion Planning

Gokul M K, Keerthivasan M

Continuously tracing a trajectory using RRT* while minimizing the deviation in the end-effector pose through an optimal control formulation. This is similar to a 3D printing task performed by a mobile manipulator. |

|

e-Yantra Robotics Competition 22-23

IIT Bombay

Gokul M K, Nikhil S

Programmed a mobile manipulator to identify, pluck and place coloured bell peppers inside a greenhouse. |

|

Website source code from jonbarron |